Expert Guide to Robots.txt for SEO Success

Robots.txt Optimization for Technical SEO Mastery

Navigating the nuances of technical SEO can often feel like a game of chess against an unseen algorithm, but a firm grasp of robots.txt optimization can turn the tide in your favor.

A well-structured robots.txt file is not just a set of instructions for web crawlers; think of it as a backstage pass that lets Googlebot spotlight the content you want to rank for while sidestepping areas that aren’t worth displaying. Paired with an XML sitemap, it helps website owners improve how their site is crawled, which in turn supports search rankings and the customer experience.

Striking the right balance within your robots.txt can transform how search engines interact with your site, paving the way for higher visibility and improved user experiences.

Keep reading as I unravel the essential strategies to leverage robots.txt for superior SEO performance.

Key Takeaways

- Understanding robots.txt Optimization Is Crucial for Directing Search Engine Behavior

- Proper Use of Allow and Disallow Directives Influences a Site’s Visibility and Rankings

- Regular Updates and Audits of robots.txt Are Necessary to Reflect Website Changes and SEO Strategies

- Strategic Inclusion of Sitemap References in robots.txt Can Improve Crawl Efficiency

- Constant Monitoring and Adjusting of robots.txt Directives Ensure Sustained SEO Success

Understanding the Basics of Robots.txt for SEO

Embarking on the journey of optimizing a website for better search engine visibility, one encounters the unassuming yet powerful force of the robots.txt file.

This might seem like a minor detail against the backdrop of intricate code and sophisticated algorithms, but make no mistake, mastering robots.txt is essential for directing search engine crawlers like Googlebot effectively.

Beyond mere access, it’s about inviting these digital explorers to focus on the content that elevates your site’s relevance and authority.

As I peel back the layers of technical SEO, revealing insights into how these engines interact with robots.txt files will be a game-changer for anyone keen on stepping up their SEO strategy.

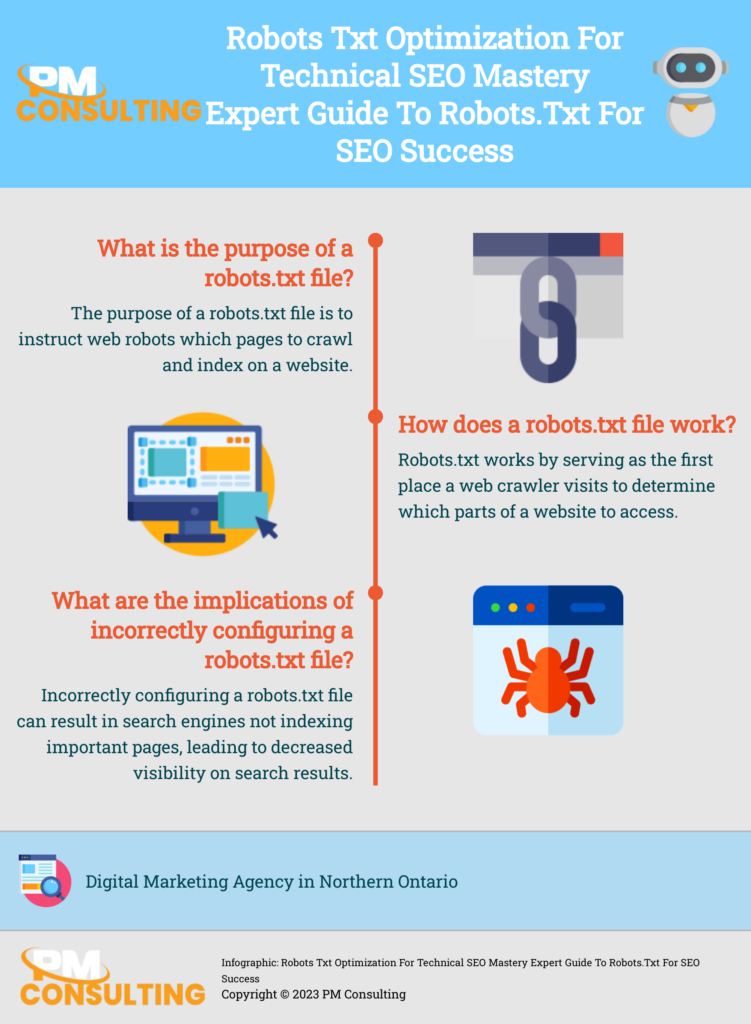

What Is Robots.txt and Why It’s Crucial for SEO

My exploration of technical SEO has led me to a profound appreciation for the role of robots.txt files. These text files are uncomplicated by design, yet they wield immense power in guiding search engine crawlers, like Googlebot, through your site, ensuring that they crawl the content you want to highlight while avoiding the areas you don’t. An effective XML sitemap complements this by helping search engines discover the pages you want to appear in search results.

Understanding the mechanics of robots.txt is not just about preventing search engines from accessing particular parts of a site; it’s about crafting a strategic map that supports a site’s visibility and ranking potential by steering crawlers toward valuable content. It’s akin to laying out a welcome mat for bots, with the XML sitemap serving as their navigational handbook.

| Element of Robots.txt | Significance |
|---|---|
| User-agent | Identifies which crawler the instructions are for |
| Disallow | Tells crawlers not to crawl the specified paths or files |
| Allow | Permits crawling of specified content, usually as an exception within a disallowed directory |
| Sitemap | Points crawlers to an XML sitemap for efficient discovery of URLs |
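To make the four elements concrete, here is a minimal sketch that feeds a sample file to Python’s standard-library urllib.robotparser module. The paths (/private/, /private/press-kit/) and the example.com sitemap URL are hypothetical placeholders. This parser applies the first matching rule in a group, so the Allow line is listed before the broader Disallow; Google instead picks the most specific rule regardless of order, and the ordering shown works under both.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical robots.txt using User-agent, Allow, Disallow and Sitemap.
ROBOTS_TXT = """\
User-agent: *
Allow: /private/press-kit/
Disallow: /private/
Sitemap: https://www.example.com/sitemap.xml
"""

parser = RobotFileParser()
parser.parse(ROBOTS_TXT.splitlines())

# Disallow blocks the directory; Allow re-opens one subfolder inside it.
for url in (
    "https://www.example.com/blog/robots-guide",
    "https://www.example.com/private/drafts.html",
    "https://www.example.com/private/press-kit/logo.png",
):
    status = "crawlable" if parser.can_fetch("Googlebot", url) else "blocked"
    print(url, "->", status)

# Sitemap lines are collected separately from the user-agent rules.
print(parser.site_maps())
```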

How Search Engines Interact With Robots.txt Files

My dedication to the intricacies of robots.txt files takes me to the heart of SEO: how search engines scrutinize these simple documents. When a crawler arrives at your virtual doorstep, it first requests the robots.txt file and uses it as a guide to which areas are open for crawling and which are off-limits, so that valuable crawl budget isn’t wasted on irrelevant sections. An XML sitemap complements this by pointing crawlers to the pages you do want discovered.

It’s important to note that well-behaved crawlers such as Googlebot heed the directives you set in robots.txt, but with some caveats. Compliance is voluntary, so the file does not stop bots that choose to ignore it, and while major search engines like Google honor the standard, others may interpret some instructions slightly differently. This variation underscores the need for precise, unambiguous rules that every crawler reads the same way, preventing unforeseen crawling and indexing issues. Marketers should also include an XML sitemap in their SEO strategy to help search engines find the pages that matter.

| Search Engine Behavior | Action Upon Finding Robots.txt |
|---|---|
| Initial Encounter | Searches for robots.txt to determine accessible and restricted areas |
| Rule Adherence | Follows specified directives but interpretations may vary across different engines |
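The “initial encounter” row can be sketched as follows: a crawler derives the robots.txt location from the scheme, host and port of the page it wants, because a robots.txt file only governs the protocol, host and port it is served from. This is a minimal illustration with a hypothetical helper name (robots_url), not any search engine’s actual code.

```python
from urllib.parse import urlsplit, urlunsplit


def robots_url(page_url: str) -> str:
    """Return the robots.txt URL that governs page_url (hypothetical helper)."""
    parts = urlsplit(page_url)
    # Keep scheme and host (including any port); replace path, query and fragment.
    return urlunsplit((parts.scheme, parts.netloc, "/robots.txt", "", ""))


print(robots_url("https://www.example.com/blog/post?id=7"))  # https://www.example.com/robots.txt
print(robots_url("http://shop.example.com:8080/cart"))       # http://shop.example.com:8080/robots.txt
```

Because the subdomain, protocol and port differ, the second page is governed by a different file than the first.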

Crafting the Perfect Robots.txt File for Your Website

Embarking on structuring the ideal robots.txt can feel akin to charting a map for treasure seekers, where the treasure is your content and the seekers are search engines’ crawlers.

Navigating best practices and averting pitfalls in the composition of your robots.txt is an art and science that demands attention.

My purpose is to share proven strategies for formatting and structuring your robots.txt file to enhance your SEO efforts, alongside highlighting common missteps you may encounter.

This guidance is not about creating an infallible script; it’s about tailoring a directive that aligns with your website’s unique landscape and search engines’ expectations.

Best Practices in Formatting and Structuring Your Robots.txt

Responsible SEO practice begins with correctly formatting and structuring a robots.txt file. A minor typo or misconfiguration could send the wrong signals to crawlers, potentially leading to significant visibility issues for your website. That’s why attention to detail is critical.

| Formatting Aspect | Importance |
|---|---|
| Case Sensitivity | Path values are case-sensitive (/Admin/ and /admin/ are different paths), so match the exact capitalization of your URLs; directive names such as Disallow are not case-sensitive. |
| Clear Directives | Use explicit ‘Allow’ and ‘Disallow’ instructions to prevent ambiguity for crawlers. |
| Syntax Precision | Adhere strictly to the recognized syntax to avoid misunderstandings by bots. |
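A quick way to see the case-sensitivity point is to run a rule against two URLs that differ only in capitalization. This sketch uses Python’s urllib.robotparser with hypothetical /admin/ paths; it also shows that the field name itself (written here in lowercase) is matched case-insensitively, whereas the path value is not.

```python
from urllib.robotparser import RobotFileParser

# Field names like "disallow" are case-insensitive; path values are not.
rules = RobotFileParser()
rules.parse([
    "user-agent: *",
    "disallow: /admin/",
])

blocked_lower = not rules.can_fetch("Googlebot", "https://www.example.com/admin/settings")
blocked_upper = not rules.can_fetch("Googlebot", "https://www.example.com/Admin/settings")
print(f"/admin/settings blocked: {blocked_lower}")
print(f"/Admin/settings blocked: {blocked_upper}")
```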

My emphasis is always on clarity when conveying instructions to web crawlers. Each command within the robots.txt file acts as a concise order, guiding engines through the site while avoiding unnecessary detours into sections not meant for public consumption or crawling. A current XML sitemap reinforces these signals by listing the pages you do want crawled.

Common Mistakes to Avoid When Writing a Robots.txt File

Engaging with the nuances of robots.txt, I have observed certain common slip-ups that can sabotage SEO efforts. One major mistake is indiscriminate use of the ‘Disallow’ directive, which, if not scoped precisely, can unintentionally block Googlebot and other search engine bots from important areas of your site.

Another critical error is neglecting to update the robots.txt file when a website is redesigned or migrated. Because the structure and content of a site can change drastically, old directives can lead to crawl errors and omissions in indexing, hurting SEO performance. It is equally important to keep the XML sitemap current so that it lists the site’s new URLs:

| Mistake | Potential SEO Impact |
|---|---|
| Overuse of ‘Disallow’ | Hinders the crawling of essential content, reducing site visibility. |
| Stale Robots.txt Directives | Leads to crawl errors and missing pages in indexation after site changes. |
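Overblocking is often a one-character problem. The sketch below, again using Python’s urllib.robotparser with a hypothetical product URL and helper name (can_crawl), compares an empty Disallow value, which permits everything, with Disallow: /, which blocks the entire site.

```python
from urllib.robotparser import RobotFileParser


def can_crawl(robots_lines, url, agent="Googlebot"):
    """Parse the given robots.txt lines and report whether agent may fetch url."""
    parser = RobotFileParser()
    parser.parse(robots_lines)
    return parser.can_fetch(agent, url)


page = "https://www.example.com/products/blue-widget"

# An empty Disallow value means "nothing is disallowed".
allow_everything = ["User-agent: *", "Disallow:"]
# A single slash matches every path on the host.
block_everything = ["User-agent: *", "Disallow: /"]

print(can_crawl(allow_everything, page))
print(can_crawl(block_everything, page))
```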

Remaining vigilant in the upkeep of your robots.txt file is paramount. As I chart my course in technical SEO, I make sure such oversights are corrected, keeping robots.txt and the XML sitemap aligned to optimize the crawlability and overall search performance of the websites I manage.

Analyzing the Impact of Disallow Directives on SEO

Steering the colossal ship that is search engine optimization requires not only a keen understanding of the elements that boost your online presence but also the finesse to mitigate any actions that might curtail it. Technical SEO plays a vital role in ensuring that search engine bots, like Googlebot, can properly crawl and index your pages, which in turn supports your search engine ranking.

As I venture deeper into the granularity of technical SEO, the spotlight often turns to the use of ‘Disallow’ directives within the robots.txt file.

These directives carry the potential to either enhance or impede a site’s SEO health, depending on their application.

Addressing the dual imperative of strategic employment and diligent refinement, my focus here is to unravel the nuances of Disallow commands, ensuring they safeguard the site’s integrity while fostering its growth in the digital ecosystem.

How to Use Disallow Directives Without Harming SEO

Mastering the art of employing Disallow directives comes with a recognition of their double-edged nature. To wield Disallow commands effectively, I apply them judiciously, blocking only the areas of a website that contain duplicate content, private data, or sections under development, thus maintaining the site’s SEO integrity without inadvertently hiding quality content from search engines. Because robots.txt is publicly readable and only asks crawlers to stay away, genuinely private data still needs proper access controls. For proper visibility in search results, consider how search engine bots such as Googlebot will interpret each Disallow rule before implementing it.

A detailed understanding of the website’s structure informs which pages or directories I disallow for Googlebot. This precision prevents valuable pages from being unintentionally dropped from search engine indices, reinforcing the website’s SEO framework while improving the experience for both bots and visitors. An XML sitemap is an essential companion for improving visibility in search results.
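Part of that precision is remembering that Disallow values are matched as URL-path prefixes, both under Google’s rules and in Python’s urllib.robotparser. In this sketch (hypothetical paths and helper name blocked), Disallow: /admin without a trailing slash also catches an unrelated public page whose path merely starts with the same letters, while /admin/ confines the rule to the directory.

```python
from urllib.robotparser import RobotFileParser


def blocked(rule_path, url):
    """Return True if 'Disallow: <rule_path>' for all agents blocks url."""
    parser = RobotFileParser()
    parser.parse(["User-agent: *", f"Disallow: {rule_path}"])
    return not parser.can_fetch("Googlebot", url)


guide = "https://www.example.com/administrator-guide"

print(blocked("/admin", guide))   # prefix match also catches the public guide
print(blocked("/admin/", guide))  # trailing slash limits the rule to the directory
```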

Testing and Modifying Disallow Rules Effectively

Testing and adjusting Disallow directives helps confirm they work as intended and preserves SEO health. I use Google Search Console’s robots.txt report (which replaced the older robots.txt Tester) to confirm how Google fetched and parsed the file, and the URL Inspection tool to check whether individual pages are blocked, tweaking the directives based on that feedback to prevent dips in search performance and keep the site accessible to crawlers.

Upon implementing changes, it’s imperative to observe their impact meticulously. I analyze site traffic and indexation patterns, refining the rules as necessary. Continuous vigilance in this process helps ensure that my Disallow directives contribute positively to the site’s SEO strategy rather than detracting from it.
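Alongside Search Console, a draft file can be checked locally before it goes live. The sketch below assumes you keep your own list of URLs that must remain crawlable; the list, the draft rules and the helper name find_blocked are all hypothetical. It relies on Python’s urllib.robotparser, whose first-match rule order can differ from Google’s longest-match precedence, so treat it as a smoke test rather than a substitute for Google’s own tools.

```python
from urllib.robotparser import RobotFileParser

DRAFT_ROBOTS_TXT = """\
User-agent: *
Disallow: /checkout/
Disallow: /search
"""

# Hypothetical list of URLs the site needs search engines to reach.
MUST_CRAWL = [
    "https://www.example.com/",
    "https://www.example.com/products/blue-widget",
    "https://www.example.com/search-engine-optimization-guide",
]


def find_blocked(robots_txt, urls, agent="Googlebot"):
    """Return the subset of urls that the draft robots.txt would block for agent."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return [url for url in urls if not parser.can_fetch(agent, url)]


for url in find_blocked(DRAFT_ROBOTS_TXT, MUST_CRAWL):
    print("Would be blocked:", url)
```

Here the check flags the SEO guide, because Disallow: /search is a prefix of its path even though the rule was only meant for internal search results.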

Leveraging Allow Directives to Enhance Indexing

Turning our attention to Allow directives, these give us a precise way to keep the content we deem essential open to crawling, even inside sections that are otherwise disallowed.

This is where the finesse of SEO craftsmanship becomes apparent, as the astute application of these directives helps ensure our prime content remains eligible to appear in search engine results.

Step by step, I will unravel methodologies to seamlessly include the content that needs to be crawled, and propose strategies to strike the right balance between what to show and what to shield using Allow and Disallow commands, thus sculpting a pathway to SEO triumph.

Steps to Include Content You Want Search Engines to Crawl

My methodical approach to SEO involves deciding which pages search engines must be able to reach. To achieve this, I frame Allow directives within robots.txt to keep the content most deserving of Googlebot’s attention open to crawling. This practice is analogous to shining a spotlight on a theater stage, ensuring the best parts of the show are seen by the audience. An XML sitemap reinforces this by listing those pages explicitly, supporting both search engine optimization and user experience.

When managing a robots.txt file, I use Allow commands to complement the broader landscape of SEO tactics. They are especially useful for clarifying intent when broad Disallow rules might otherwise leave valuable content in the shadows, ensuring that search engines can still reach the core assets of the site. An XML sitemap, in turn, helps search engines navigate and understand the website’s structure.

Strategies for Balancing Allow and Disallow Commands

In my day-to-day optimization tasks, striking the right balance between Allow and Disallow commands for Googlebot feels akin to orchestrating a symphony where each note must be played at the right time for harmony. My strategy hinges on deploying Disallow instructions with precision to shield non-essential or sensitive areas without cluttering the bot’s path, while setting Allow directives that keep content-rich pages reachable so they can be crawled and indexed promptly.

Over the years, I’ve honed a keen sense for the delicate balancing act that these commands require. Careful analysis of site structure and content importance helps me draft a robots.txt that grants Googlebot unhindered access to the rich, engaging material that serves the user, while sidestepping the back-end processes and duplicate content that can muddle search engine insights and efficiency.
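How conflicts between Allow and Disallow are settled depends on the parser. Google documents that the most specific rule (the one with the longest matching path) wins and that, on a tie, the less restrictive Allow rule wins. The sketch below is a simplified, hypothetical re-implementation of that precedence (the function name is_allowed is illustrative): it handles plain path prefixes only and ignores the * and $ wildcards that Google also supports.

```python
def is_allowed(rules, path):
    """Decide access for path using longest-match precedence.

    rules is a list of (directive, path_prefix) pairs, e.g. ("Disallow", "/private/").
    Wildcards (* and $) are deliberately not supported in this sketch.
    """
    best_len = -1
    allowed = True  # no matching rule means the path may be crawled
    for directive, prefix in rules:
        if not prefix or not path.startswith(prefix):
            continue  # empty values and non-matching prefixes are ignored
        is_allow = directive.lower() == "allow"
        # Longer prefix wins; on equal length, Allow beats Disallow.
        if len(prefix) > best_len or (len(prefix) == best_len and is_allow):
            best_len = len(prefix)
            allowed = is_allow
    return allowed


rules = [
    ("Disallow", "/private/"),
    ("Allow", "/private/press-kit/"),
]
print(is_allowed(rules, "/private/press-kit/logo.png"))
print(is_allowed(rules, "/private/drafts.html"))
print(is_allowed(rules, "/blog/"))
```

Under this precedence the order of the lines does not matter. By contrast, Python’s urllib.robotparser stops at the first matching rule in file order, so with the Disallow line listed first it would block the press-kit folder.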

Getting More Advanced With Sitemap References in Robots.txt

Embarking on the subtle art of robots.txt optimization introduces a vital component often overlooked: the strategic inclusion of sitemap references.

A sitemap acts as a comprehensive chart of a website’s layout, offering search engines a streamlined path to all the content available.

Integrating this element within the robots.txt not only boosts crawl efficiency but also aids in revealing the full breadth of your site to search crawlers.

My focus shifts to embedding sitemap locations properly and debugging typical sitemap-related conundrums that may impede the robots.txt from functioning at its best.

Integrating Sitemap Locations Into Your Robots.txt File

The strategic incorporation of sitemap references within a robots.txt file is a simple but effective way to support SEO. By including the full URLs of my XML sitemaps, I ensure that search engine crawlers, such as Googlebot, have a comprehensive blueprint of the website’s architecture. This facilitates an efficient and thorough examination of the site’s content, allowing for more effective crawling and indexing.

Embedding XML sitemap references in robots.txt calls for precision and an understanding of how crawlers use sitemaps. Directing bots to these resources is a clear invitation to explore the full structure of the website, spotlighting the valuable content that awaits discovery:

| Robots.txt Element | Purpose |
|---|---|
| Sitemap Reference | Guides crawlers to the website’s sitemap for expedited and comprehensive indexing. |
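As a small illustration, the Sitemap field is independent of the user-agent groups, may appear anywhere in the file, and may be repeated. This sketch (hypothetical sitemap URLs) reads the references back with site_maps() from Python’s urllib.robotparser, available since Python 3.8.

```python
from urllib.robotparser import RobotFileParser

ROBOTS_TXT = """\
Sitemap: https://www.example.com/sitemap-pages.xml

User-agent: *
Disallow: /cart/

Sitemap: https://www.example.com/sitemap-products.xml
"""

parser = RobotFileParser()
parser.parse(ROBOTS_TXT.splitlines())

# Both references are collected regardless of where they appear in the file.
for sitemap_url in parser.site_maps():
    print(sitemap_url)
```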

Troubleshooting Common Issues With Sitemaps and Robots.txt

Navigating the challenges associated with XML sitemaps and robots.txt is an integral part of my SEO expertise. Occasionally, sitemaps referenced in robots.txt are inaccessible because of incorrect URLs or because they are mistakenly disallowed by a rule in the same file, which can result in crawlers missing crucial information they need to index the site effectively.

My approach involves consistent monitoring and validation of the XML sitemap references in robots.txt. I check that the sitemaps are correctly formatted and follow the guidelines set out by search engines, fixing any errors swiftly to support the smooth indexing that underpins SEO success.
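Part of that validation can be automated. The sketch below assumes two hypothetical checks in the spirit of the paragraph above: that each Sitemap value is an absolute URL (the sitemaps protocol expects a full URL) and that the same file does not disallow the sitemap’s own path for a given crawler. The helper name sitemap_problems and the sample file are illustrative.

```python
from urllib.parse import urlsplit
from urllib.robotparser import RobotFileParser

ROBOTS_TXT = """\
User-agent: *
Disallow: /feeds/

Sitemap: /sitemap.xml
Sitemap: https://www.example.com/feeds/sitemap-news.xml
Sitemap: https://www.example.com/sitemap-pages.xml
"""


def sitemap_problems(robots_txt, agent="Googlebot"):
    """List sitemap references that are relative or blocked by the same file."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    problems = []
    for url in parser.site_maps() or []:
        parts = urlsplit(url)
        if not (parts.scheme and parts.netloc):
            problems.append(f"{url}: not an absolute URL")
        elif not parser.can_fetch(agent, url):
            problems.append(f"{url}: path is disallowed for {agent}")
    return problems


for problem in sitemap_problems(ROBOTS_TXT):
    print(problem)
```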

Maintaining an Optimized Robots.txt Over Time

An optimized robots.txt is not a set-it-and-forget-it tool; it demands consistent attention and updates to remain effective. My practice involves regular audits of the robots.txt file and the XML sitemap to ensure they align with the current website structure and search engine requirements. It’s critical that new pages, site sections, and digital assets are correctly reflected in the file.

Seasonal changes and marketing campaigns also prompt revisions in robots.txt. I proactively tweak directives to accommodate temporary pages or promotions, safeguarding their search visibility without disrupting the established SEO hierarchy. This ensures that every initiative has the opportunity to contribute positively to overall site performance.

Technological advancements and changes in search engine algorithms necessitate vigilance. I stay abreast of these developments, revising the robots.txt file and XML sitemap to leverage new opportunities for optimization or to address potential vulnerabilities that could hinder site indexing or rankings. My goal is to secure and maintain the SEO foothold for the sites I manage.

A truly optimized robots.txt, including its Googlebot-specific Disallow rules, evolves in tandem with the digital environment it exists in. I integrate feedback from analytics tools and search performance data, refining the file to better serve the needs of both users and search engines. Through informed adjustments, I ensure that the robots.txt file remains an asset, enhancing a website’s SEO landscape over time. A well-implemented XML sitemap can further support search engine ranking and user experience.
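One way to make such revisions safer is to compare the current and proposed files against the same set of URLs before publishing. This is a hypothetical sketch (the helper name crawl_status_changes, both file versions and the URL list are illustrative) built on Python’s urllib.robotparser.

```python
from urllib.robotparser import RobotFileParser


def crawl_status_changes(old_txt, new_txt, urls, agent="Googlebot"):
    """Return {url: (old_allowed, new_allowed)} for URLs whose status would change."""
    old, new = RobotFileParser(), RobotFileParser()
    old.parse(old_txt.splitlines())
    new.parse(new_txt.splitlines())
    changes = {}
    for url in urls:
        before, after = old.can_fetch(agent, url), new.can_fetch(agent, url)
        if before != after:
            changes[url] = (before, after)
    return changes


OLD = "User-agent: *\nDisallow: /checkout/\n"
# Proposed revision: also hide a retired seasonal campaign.
NEW = "User-agent: *\nDisallow: /checkout/\nDisallow: /summer-sale/\n"

URLS = [
    "https://www.example.com/",
    "https://www.example.com/checkout/step-1",
    "https://www.example.com/summer-sale/",
]

for url, (before, after) in crawl_status_changes(OLD, NEW, URLS).items():
    print(f"{url}: {'crawlable' if before else 'blocked'} -> {'crawlable' if after else 'blocked'}")
```

Only URLs whose status actually flips are reported, which keeps the review focused on the intended change and surfaces any unintended ones.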

Frequently Asked Questions

What is Robots.txt and How Does it Impact SEO?

A robots.txt file is a text file that webmasters use to tell search engine bots (such as Googlebot) which URLs on their website they may crawl. This file is crucial for SEO because it helps you control which parts of your site search engines spend their time crawling. Proper use of robots.txt can keep crawlers away from duplicate content, private areas, or files and directories that don’t contribute to your SEO efforts.

Best Practices for Setting Up Robots.txt

Key best practices include:

- Disallowing Irrelevant Sections: Prevent search engines from crawling irrelevant sections like admin areas.

- Allowing Important Content: Ensure that search engines can access and index your primary content.

- Using Crawl Delay Wisely: Use crawl delay directives sparingly, as they can slow search engine access (Googlebot ignores this field, though some other crawlers honor it).

- Regular Updates: Regularly review and update your robots.txt to align with changes in your site structure and content strategy.

- Avoid Blocking CSS/JS Files: Don’t block CSS and JavaScript files, as search engines need these to render your pages correctly.

Common Mistakes in Robots.txt Files

Common mistakes include:

- Blocking Important Content: Accidentally disallowing search engines from crawling important pages.

- Syntax Errors: Using incorrect syntax which can lead to unintended blocking.

- Overuse of Disallow: Excessively disallowing content can limit your site’s visibility.

- Ignoring Crawl Budget: Not considering crawl budget, leading to inefficient use of search engine resources.

Robots.txt vs. Meta Robots Tag

Robots.txt controls crawler access to entire sections of a site, while the meta robots tag controls indexing and link following on a page-by-page basis. Use robots.txt for broad instructions and meta robots tags for specific page-level directives. Note that robots.txt can prevent crawling, but not indexing: a blocked URL can still appear in search results if other pages link to it. To keep a page out of the index, use a noindex meta robots tag, which Googlebot respects, and leave the page crawlable, because a crawler blocked by robots.txt never sees the tag.

Testing and Monitoring Robots.txt

To test and monitor your robots.txt file:

- Use Google Search Console: Its robots.txt report (the successor to the older robots.txt Tester) flags errors in the file, and the URL Inspection tool shows whether a given page is blocked.

- Regular Audits: Conduct regular audits to ensure it aligns with your current SEO strategy.

- Monitor Traffic and Indexing: Keep an eye on your site’s traffic and indexing status to see if changes in robots.txt have any unintended consequences.

Creating a perfect robots.txt file for SEO involves a balance between allowing search engines to index your valuable content and preventing them from accessing areas that are not meant for public viewing or do not contribute to your SEO efforts. Here’s a step-by-step guide:

Step 1: Understand Your Website’s Structure

- Know Your Content: Identify which parts of your website are crucial for SEO and which are not. This includes understanding your site’s directories, file types, and the purpose of various sections.

Step 2: Specify User Agents

- Identify User Agents: Start your robots.txt file by specifying user agents (search engine crawlers). Use User-agent: * to apply the rules to all crawlers, or specify individual crawlers like User-agent: Googlebot for Google’s crawler.
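To illustrate how groups are selected: a crawler follows the group that names it most specifically and ignores the rest, so a dedicated Googlebot group replaces, rather than adds to, the User-agent: * rules for Googlebot. This sketch uses Python’s urllib.robotparser with hypothetical paths; its name matching is simpler than Google’s, but it behaves the same way for this file.

```python
from urllib.robotparser import RobotFileParser

ROBOTS_TXT = """\
User-agent: *
Disallow: /drafts/
Disallow: /beta/

User-agent: Googlebot
Disallow: /beta/
"""

parser = RobotFileParser()
parser.parse(ROBOTS_TXT.splitlines())

draft = "https://www.example.com/drafts/post-1"
# Googlebot matches its own group, so only /beta/ is off-limits to it.
print("Googlebot:", parser.can_fetch("Googlebot", draft))
# Other crawlers fall back to the * group, which also blocks /drafts/.
print("Bingbot:", parser.can_fetch("Bingbot", draft))
```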

Step 3: Set Allow and Disallow Directives

- Allow Important Content: Use Allow: to ensure search engines can crawl important parts of your site. This is particularly useful if you have a general disallow rule but want to make exceptions.

- Disallow Unnecessary Sections: Use Disallow: to prevent search engines from crawling certain parts of your site. Common examples include admin pages, private areas, or duplicate content.

Step 4: Manage Crawl Delay (Use Sparingly)

- Crawl Delay: If necessary, use Crawl-delay: to control the speed at which a crawler accesses your site. Googlebot ignores this field, so it only affects crawlers that support it. Be cautious with this, as setting it too high can hinder your site’s ability to be crawled efficiently.
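Because Crawl-delay is a non-standard field, support varies by crawler. The sketch below only shows how one parser that does read it, Python’s urllib.robotparser, exposes the value per user agent; the bot names and the 10-second value are illustrative.

```python
from urllib.robotparser import RobotFileParser

ROBOTS_TXT = """\
User-agent: Bingbot
Crawl-delay: 10

User-agent: *
Disallow: /tmp/
"""

parser = RobotFileParser()
parser.parse(ROBOTS_TXT.splitlines())

# The delay applies only to the group that declares it.
print("Bingbot delay:", parser.crawl_delay("Bingbot"))
print("Other bots delay:", parser.crawl_delay("SomeOtherBot"))
```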

Step 5: Add Sitemap Location

- Link Your Sitemap: Include the full URL of your sitemap by adding Sitemap: http://www.yoursite.com/sitemap.xml. This helps search engines find and index your content more efficiently.

Step 6: Avoid Common Mistakes

- Syntax Accuracy: Ensure your syntax is correct. Mistakes in the robots.txt file can lead to significant indexing issues.

- No Overblocking: Be careful not to disallow content that should be indexed.

- Regular Updates: Keep your robots.txt file updated with changes in your website’s structure and content strategy.

Step 7: Test Your Robots.txt File

- Use Testing Tools: Use Google Search Console’s robots.txt report and URL Inspection tool, or another robots.txt testing tool, to check for errors and confirm that your directives work as intended.

Step 8: Monitor and Adjust

- Regular Monitoring: Regularly monitor your site’s performance in terms of crawl stats and indexing. Adjust your robots.txt file as needed based on these insights.

Example of a Simple Robots.txt File:

In this example, all user agents are disallowed from accessing the directories listed, while the sitemap location is clearly indicated.
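A file matching that description might look like the following, assuming hypothetical /admin/, /cgi-bin/ and /tmp/ directories and the placeholder sitemap URL from Step 5. It is wrapped in a short Python check using the standard-library urllib.robotparser so the rules can be verified before upload.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical directory names; replace them with your own site's paths.
SIMPLE_ROBOTS_TXT = """\
User-agent: *
Disallow: /admin/
Disallow: /cgi-bin/
Disallow: /tmp/

Sitemap: http://www.yoursite.com/sitemap.xml
"""

parser = RobotFileParser()
parser.parse(SIMPLE_ROBOTS_TXT.splitlines())

for path in ("/", "/blog/first-post", "/admin/", "/tmp/cache.html"):
    allowed = parser.can_fetch("*", "http://www.yoursite.com" + path)
    print(f"{path:20} {'allowed' if allowed else 'disallowed'}")
print("Sitemap:", parser.site_maps())
```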

Remember, the “perfect” robots.txt file varies for each website, depending on its unique structure and SEO goals. Regular review and adaptation are key to maintaining its effectiveness.

These FAQs should provide a comprehensive understanding of the role and optimization of robots.txt in SEO strategies.

Conclusion

The mastery of robots.txt is critical for SEO success, as it serves as a guide for search engine crawlers, directing them to the content that should be crawled and steering them away from areas that should not. Website owners pair it with an XML sitemap to help search engines find the pages that matter, supporting both rankings and user experience.

Ensuring the file is correctly formatted and structured, with clear ‘Allow’ and ‘Disallow’ directives, is essential, as even minor errors can have significant consequences for a site’s visibility.

It is vital to regularly test and refine these directives, using tools like Google Search Console, to maintain the integrity of a website’s SEO.

Additionally, the strategic inclusion of sitemap references within robots.txt can profoundly improve crawl efficiency by providing search engines with a detailed map of a site’s content.

Regular monitoring and updating of robots.txt and the XML sitemap are necessary to adapt to new site developments, seasonal changes, and evolving search engine algorithms, ensuring the file continues to contribute to the site’s SEO performance.

In essence, a well-optimized robots.txt file is an invaluable asset for any website looking to secure and enhance its presence in the digital landscape.